Large Language Models (LLMs) are gaining attention in Network Operations Center (NOC) teams, but their value isn’t always obvious. This blog looks at how LLMs help NOC analysts in real operational settings, the challenges of using them in NOC environments, and whether they truly change how NOCs operate or simply add another layer of complexity to AI in network operations.

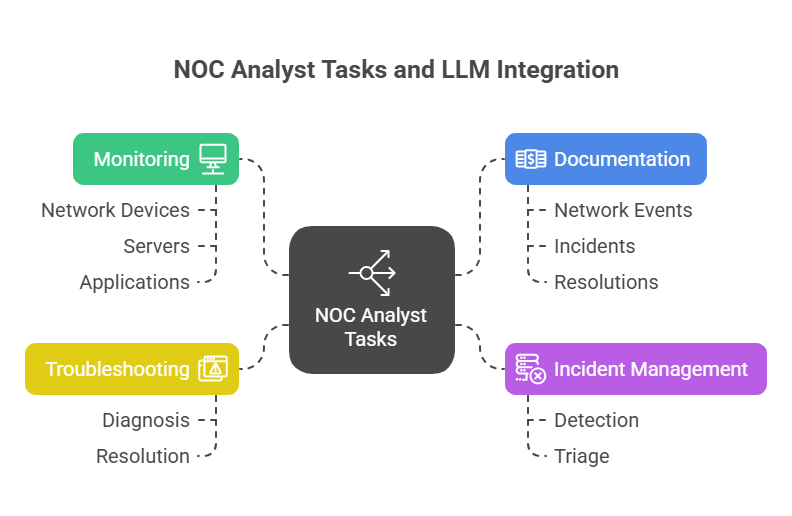

In most enterprises, the NOC is the first place trouble shows up. When a service slows down or something breaks, it ends up with the NOC team. Analysts move between dashboards, alerts, and logs all day, trying to figure out what actually needs attention before users or business teams start to feel the impact.

That work has only become harder. Modern environments are spread across cloud, on-prem, and third-party platforms. Signals arrive from everywhere, alerts stack up quickly, and telling a real incident apart from background noise often takes more time than teams can afford. This pressure is why network monitoring automation and network operations center automation are no longer seen as nice-to-have improvements, but as necessary steps to keep operations under control.

Over the last year or so, LLMs have started coming up more often in day-to-day operational discussions. Not as formal projects, but as tools teams are curious about and quietly experimenting with. The interest is straightforward. They can sift through long blocks of text, highlight what’s relevant, and put it into language people can quickly understand, something most monitoring tools were never built to handle.

As alert volumes continue to grow, especially in busy NOCs, that capability has drawn attention. For teams dealing with constant alert fatigue, the idea of using AI in network operations to cut through the noise has become hard to ignore.

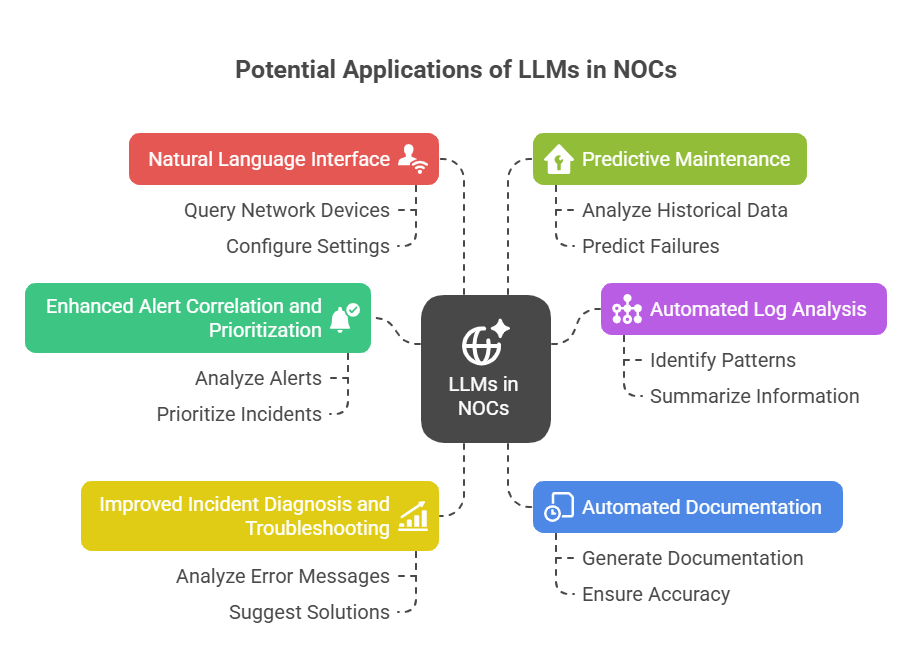

Potential Applications of LLMs in NOCs

Challenges and Limitations

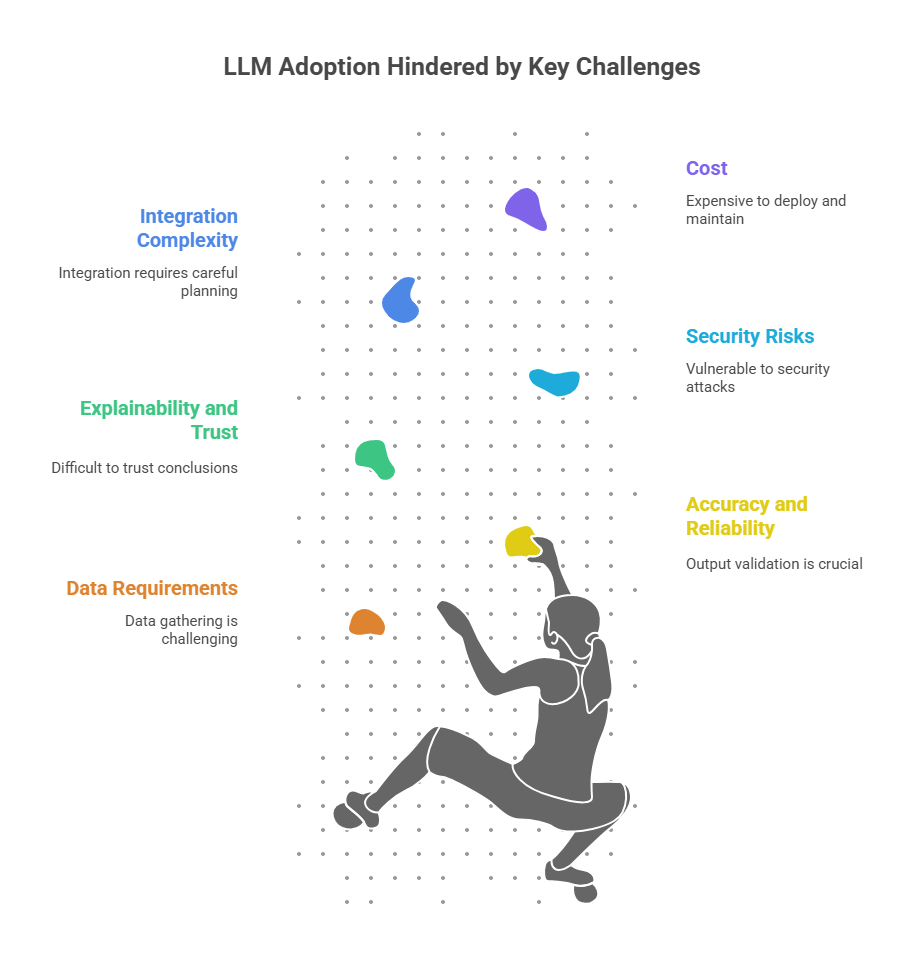

Despite their potential benefits, LLMs also face several challenges and limitations that may hinder their widespread adoption in NOCs. The limitations of using LLMs in NOC environments become more pronounced as scale and criticality increase.

- Data Requirements: Need large, relevant datasets.

- Accuracy & Reliability: LLMs can produce errors, so outputs must be validated before anyone acts on them. This is one of the main risks of using LLMs in IT operations.

- Explainability & Trust: Black-box nature reduces confidence.

- Security Risks: Vulnerable to attacks like prompt injection.

- Integration Complexity: Hard to fit into existing workflows.

- Cost: High expense for development, deployment, and maintenance.
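The accuracy and security points above both come down to treating model output as untrusted until it has been checked. As a minimal sketch, assuming a hypothetical setup in which the LLM is asked to return a JSON object with `severity` and `summary` fields (these field names and the allowed severity values are illustrative assumptions, not part of any specific product), a NOC tool could reject anything that does not match the expected shape:

```python
import json

# Assumed severity vocabulary for this sketch; a real NOC would use its own.
ALLOWED_SEVERITIES = {"low", "medium", "high", "critical"}

def parse_triage(raw):
    """Return a validated triage dict, or None if the model output is unusable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None  # not JSON at all
    if not isinstance(data, dict):
        return None  # JSON, but not an object
    severity = data.get("severity")
    summary = data.get("summary")
    # Only accept known severities and a plain-text summary.
    if not isinstance(severity, str) or severity not in ALLOWED_SEVERITIES:
        return None
    if not isinstance(summary, str):
        return None
    # Drop any extra fields the model may have added.
    return {"severity": severity, "summary": summary}

ok = parse_triage('{"severity": "high", "summary": "Link flap on core-1"}')
bad = parse_triage('{"severity": "reboot everything", "summary": "x"}')
```

A check like this does not make the model more accurate. It only ensures that malformed or unexpected output is routed back to an analyst instead of into an automated workflow.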

Where LLMs Succeed in Real NOC Environments

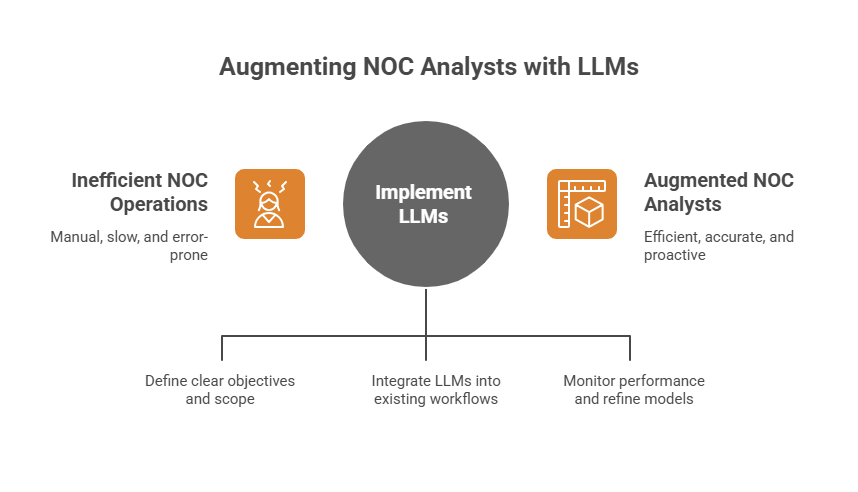

In real NOC environments, LLMs tend to work only when the basics are already in place. Teams need consistent monitoring, cleaner signals, and clear ownership of what happens when something breaks. From running enterprise networks across on-prem and cloud environments, Jade Global has seen that LLMs are most useful when they support existing workflows rather than sit on top of them. When monitoring is unified and noise is already under control through network monitoring automation, LLMs can help analysts move faster.
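To illustrate what "noise already under control" can mean before an LLM is involved, here is a small sketch that collapses repeated alerts into one entry per host and check. The alert fields (`host`, `check`) are hypothetical, and real monitoring platforms usually provide their own deduplication features.

```python
from collections import Counter

def collapse_alerts(alerts):
    """Group repeated alerts by (host, check) and count occurrences."""
    counts = Counter((a["host"], a["check"]) for a in alerts)
    return [
        {"host": host, "check": check, "count": n}
        for (host, check), n in counts.items()
    ]

# Hypothetical raw alert stream.
raw_alerts = [
    {"host": "edge-1", "check": "bgp_session"},
    {"host": "edge-1", "check": "bgp_session"},
    {"host": "edge-1", "check": "bgp_session"},
    {"host": "db-2", "check": "disk_usage"},
]
collapsed = collapse_alerts(raw_alerts)
```

Passing the collapsed list to a model, rather than the raw stream, keeps the input short and makes the resulting summary easier for an analyst to verify.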

Game Changer or Gimmick?

LLMs can be a game-changer for NOC analysts if applied to the right use cases, with quality data and seamless integration. This leads many teams to ask: should enterprises use LLMs in NOC operations, and if so, where do they draw the line?

Currently, LLMs are not a complete replacement for NOC analysts. This raises a more direct question: do LLMs replace NOC analysts, or do they simply shift how analysts interact with alerts, incidents, and operational data? The answer is that they are best used as tools to augment the capabilities of human analysts. By automating repetitive tasks, providing insights, and assisting with troubleshooting, LLMs can free up NOC analysts to focus on more complex and strategic issues.

Why This Matters to NOC Decision-Makers

For Network Operations leaders, IT Operations leaders, and CIOs, LLMs are no longer something to test in isolation. The real concern is whether they help teams keep services stable as networks become more distributed and harder to manage.

NOC leaders carry direct responsibility for uptime, SLA commitments, operating cost, and risk. Any new capability is judged on practical impact: does it shorten incident resolution, cut through alert fatigue, and allow teams to scale without adding headcount at the same pace? In simple terms, does it reduce Mean Time to Resolution (MTTR), help teams focus on real issues, and avoid introducing new operational or governance risk?
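Since MTTR is the yardstick here, it helps to be explicit about how it is measured. A minimal sketch, assuming incidents are stored as hypothetical `(opened, resolved)` timestamp pairs and that unresolved incidents are excluded from the average:

```python
from datetime import datetime, timedelta

def mean_time_to_resolution(incidents):
    """Average (resolved - opened) over incidents that have been resolved."""
    durations = [
        resolved - opened
        for opened, resolved in incidents
        if resolved is not None
    ]
    if not durations:
        return None  # nothing resolved yet, so MTTR is undefined
    return sum(durations, timedelta()) / len(durations)

# Hypothetical incident records: (opened, resolved).
incidents = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 9, 30)),    # 30 minutes
    (datetime(2024, 1, 1, 12, 0), datetime(2024, 1, 1, 13, 30)),  # 90 minutes
    (datetime(2024, 1, 2, 8, 0), None),                           # still open
]
mttr = mean_time_to_resolution(incidents)
```

Comparing this figure over the same incident categories before and after an LLM rollout is one straightforward way to judge whether the tool is paying off.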

The value of LLMs in NOC environments is less about advanced intelligence and more about speed and clarity. Used well, they help analysts bring together alerts, logs, and historical context much faster than manual investigation allows.

That speed shows up in everyday operations. Triage cycles shorten, handoffs across shifts become more consistent, and MTTR improves in measurable ways. For organizations that already invest in network monitoring automation and network operations center automation, LLMs tend to strengthen those foundations by making existing operational data easier to act on, rather than by adding yet another layer of tools.

What NOC Leaders Should Evaluate Before Adoption

Before bringing LLMs into a NOC, it’s worth being honest about how ready the operation actually is. If alerts are messy or day-to-day workflows change depending on who’s on shift, an LLM won’t clean that up. More often, it just makes the cracks show faster.

This is usually the point where teams realize they need to step back and check how things really work today. Ownership during incidents, how escalations play out outside business hours, and how decisions are verified in the moment all start to matter very quickly. Speed alone isn’t enough if security, governance, and control aren’t clearly defined around operational data.

There’s also a practical reality around payoff. If an LLM introduces more work to integrate and maintain, but doesn’t noticeably reduce MTTR or operating cost, the value drops off fast. In many cases, the issue isn’t the technology itself, but that the organization isn’t ready to absorb it yet.

What This Means in Practice for NOC Leaders

In well-run NOCs, LLMs tend to help in practical ways. Analysts spend less time pulling context together, escalations are clearer, and incident handling becomes more consistent without growing teams.

In less mature environments, the outcome can be very different. LLMs may blur ownership or slow response during critical moments. When that happens, the cause is usually not the technology but a lack of operational readiness.

This is where Jade focuses its approach. Instead of starting with the model, the emphasis is on how the NOC operates today. LLMs are introduced only where processes, data quality, and escalation paths are strong enough to support them.

The line between what people handle and what the AI supports is defined early, and governance isn’t bolted on later. What matters in the end is whether day-to-day operations actually improve: incidents close faster, escalations are clearer, and analysts spend less time chasing context. When those things show up, teams move past experimentation and into steady improvement, without taking on extra risk.

Conclusion

LLMs have the potential to significantly improve the efficiency and effectiveness of NOC operations. However, they are not a silver bullet. Understanding the limitations of LLMs in IT operations is just as important as understanding their benefits.

As LLM technology continues to evolve, these models are more likely to play a supporting role, augmenting analysts within structured network operations center automation models, rather than replacing human expertise altogether.

Is your NOC ready for AI-assisted operations? Jade Global works with teams to modernize operations, reduce alert noise, and apply AI in network operations responsibly, introducing LLMs where they make sense and avoiding them where they don’t.

Get a Custom Network & NOC Readiness Assessment