The Meaning of Shifting Left on Cloud for Technology Companies

You must have heard the term “shift left” from consultants pitching DevOps practices to modernize your software development and operations toolchain and processes. People often claim that shifting left reduces complexity in your IT organization. They rarely say what it simplifies or explain how.

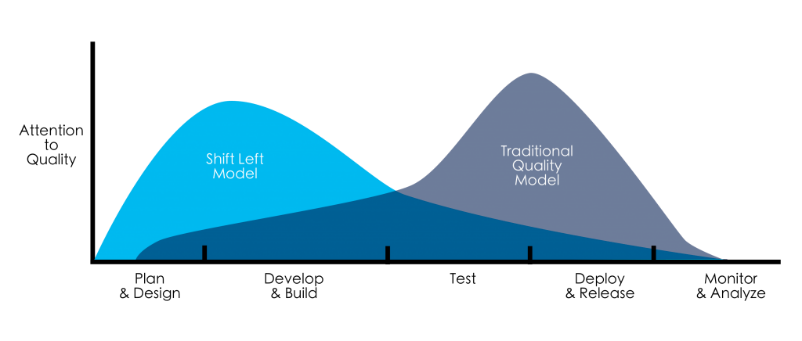

Upon further probing, you might find two distinct meanings: shift left testing and shift left cloud security. Shift left testing is easiest to understand when contrasted with the traditional software development process. In the conventional cycle, quality gets most of the attention after the build phase is over, during testing and deployment. In shift left testing, quality is built into the development process instead. Problems are not left to surface late in the cycle, where they can force redesigns and delays. Testing starts early, so quality control is built in throughout.
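As a minimal illustration of shift left testing, the sketch below keeps unit tests next to the function they check. The tests can then run on every commit, for example with `python -m unittest` in a pre-commit hook or CI step, instead of waiting for a separate testing phase. The `parse_timeout` function and its validation rules are hypothetical examples, not taken from any particular codebase.

```python
import unittest


def parse_timeout(value: str) -> int:
    """Parse a timeout setting in seconds, rejecting bad input early (hypothetical helper)."""
    seconds = int(value.strip())  # raises ValueError for non-numeric input
    if seconds <= 0 or seconds > 3600:
        raise ValueError(f"timeout out of range: {seconds}")
    return seconds


class ParseTimeoutTest(unittest.TestCase):
    # These tests are written alongside the code, so defects surface during development.
    def test_accepts_valid_value(self):
        self.assertEqual(parse_timeout(" 30 "), 30)

    def test_rejects_zero(self):
        with self.assertRaises(ValueError):
            parse_timeout("0")

    def test_rejects_non_numeric(self):
        with self.assertRaises(ValueError):
            parse_timeout("thirty")
```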

Shift Left is about doing things earlier in the development cycle. Source: van der Cruijsen 2017

Another dimension of the shift left principle is shift left security. Until recently, security hardening came very late, during software deployment, release, and monitoring. This was due not only to the separation of control between teams, but also to the separation of responsibilities. Security issues come into play once application code starts running on your infrastructure, so security during development was mostly an afterthought. Today, cloud computing has become synonymous with enterprise IT. Application teams with the right permissions can provision infrastructure by executing code and have it go live in seconds. As a result, security can no longer be left until the last minute.

Organizations use infrastructure-as-code to provision execution environments declaratively, and container platforms such as Kubernetes to provision workloads dynamically. Together, these have greatly improved the productivity of their teams and the velocity of their feature releases. However, securing those workloads and the environments they run on has also become more complex; the focus has simply shifted. This added complexity is one of the significant challenges COOs and CTOs face today as they move their workloads to the cloud in droves.
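Because infrastructure-as-code is declarative, it can be checked before anything is provisioned. The sketch below assumes the IaC definition has already been parsed into plain Python dictionaries. The `ingress`, `cidr`, and `port` field names are hypothetical and do not follow any specific tool's schema. The check flags rules that open SSH or RDP to the whole internet.

```python
from typing import Any

# Ports that should never be reachable from 0.0.0.0/0 (an illustrative policy choice).
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP"}


def find_open_admin_ports(resources: dict[str, dict[str, Any]]) -> list[str]:
    """Return human-readable violations for administrative ports open to the world."""
    violations = []
    for name, resource in resources.items():
        for rule in resource.get("ingress", []):
            if rule.get("cidr") == "0.0.0.0/0" and rule.get("port") in SENSITIVE_PORTS:
                service = SENSITIVE_PORTS[rule["port"]]
                violations.append(f"{name}: {service} (port {rule['port']}) open to the internet")
    return violations


# Hypothetical parsed definition of two firewall resources.
definition = {
    "web_sg": {"ingress": [{"cidr": "0.0.0.0/0", "port": 443}]},
    "admin_sg": {"ingress": [{"cidr": "0.0.0.0/0", "port": 22}]},
}

for violation in find_open_admin_ports(definition):
    print("POLICY VIOLATION:", violation)
```

In a pipeline, a non-empty result would fail the build before any infrastructure is provisioned.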

As you plan to move your enterprise workloads to the cloud, it is paramount to understand the technology drivers behind shifting testing and security left. To build security hardening and quality control into the process early, consider four key elements in your DevSecOps pipeline.

Static Analysis of Cloud Security and Software Composition

Analyzing your code as developers build the modules provides immediate feedback on vulnerabilities. Some vulnerabilities stem from something simple, such as a developer opening a connection to a backend database and never closing it. Others are riskier, such as hardcoded authentication credentials or third-party libraries with known security issues. Open-source tools such as OWASP Dependency-Check and OWASP Dependency-Track look for unsafe libraries using the National Vulnerability Database (NVD). You can also integrate them with external APIs to get an aggregated view of all vulnerabilities. Products such as SonarQube, which comes in both free and commercial editions, add code quality checks on top of security vulnerability detection. This helps keep the code in your repository branches production-ready.
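To make the hardcoded-credentials case concrete, here is a deliberately small static check built on Python's standard `ast` module. It is a heuristic sketch, not a replacement for the tools named above. It only flags non-empty string literals assigned to variables or keyword arguments whose names look secret-like, and the list of name hints is an assumption you would tune for your codebase.

```python
import ast

# Name fragments that suggest a secret (a heuristic, not an exhaustive list).
SECRET_HINTS = ("password", "passwd", "secret", "api_key", "token")


def _is_nonempty_str(node: ast.AST) -> bool:
    return isinstance(node, ast.Constant) and isinstance(node.value, str) and node.value != ""


def _looks_secret(name: str) -> bool:
    return any(hint in name.lower() for hint in SECRET_HINTS)


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line number, name) pairs where a string literal is bound to a secret-like name."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Pattern 1: NAME = "literal"
        if isinstance(node, ast.Assign) and _is_nonempty_str(node.value):
            for target in node.targets:
                if isinstance(target, ast.Name) and _looks_secret(target.id):
                    findings.append((node.lineno, target.id))
        # Pattern 2: some_call(name="literal")
        elif isinstance(node, ast.keyword) and node.arg and _is_nonempty_str(node.value):
            if _looks_secret(node.arg):
                findings.append((node.lineno, node.arg))
    return sorted(findings)


SAMPLE = '''
import os
DB_PASSWORD = "hunter2"
api_key = os.environ["API_KEY"]
conn = connect(host="db", password="s3cret")
'''

for line, name in find_hardcoded_secrets(SAMPLE):
    print(f"line {line}: possible hardcoded credential in '{name}'")
```

Reading the key from the environment (line 4 of the sample) is not flagged, because its value is not a string literal.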

You can integrate one or more of these tools into your AWS CodeBuild pipeline. In many cases, you can also improve code quality with ML-driven insights from Amazon CodeGuru Reviewer. CodeGuru Reviewer uses machine learning and automated reasoning to flag defects and security vulnerabilities in your code, which can save valuable time in human code reviews and fixes.

Dynamic Analysis of Security State and Runtime

Dynamic security analysis takes an outside-in approach. It tests your running applications for exploitable weaknesses that static, pre-build analysis cannot detect. Ideally, you would integrate a Dynamic Application Security Testing (DAST) tool into the pipeline. Once static analysis and an optional manual review pass, the code is deployed to your target environment, and that deployment triggers a DAST scan.
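The stage ordering described above can be sketched as a simple sequential runner. This is a hypothetical model, not any CI product's API. Each stage is a callable returning pass or fail, optional stages can be disabled, and the pipeline stops at the first failure. As a result, the DAST scan only runs after static analysis has passed and the code has been deployed.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True when the stage passes
    enabled: bool = True     # lets optional stages (e.g. manual review) be switched off


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run enabled stages in order, stop at the first failure, and return the names that ran."""
    executed = []
    for stage in stages:
        if not stage.enabled:
            continue
        executed.append(stage.name)
        if not stage.run():
            print(f"Pipeline stopped: {stage.name} failed")
            break
    return executed


# Placeholder stage bodies; real ones would call your analysis, deployment, and DAST tools.
pipeline = [
    Stage("static-analysis", lambda: True),
    Stage("manual-review", lambda: True, enabled=False),
    Stage("deploy-to-test-env", lambda: True),
    Stage("dast-scan", lambda: False),  # pretend the DAST scan found an issue
]

print(run_pipeline(pipeline))
```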

OWASP’s Zed Attack Proxy (ZAP) is a popular web security tool that automatically finds vulnerabilities in your web-facing resources. ZAP is distributed as Docker images and includes an automation framework that lets you integrate it with any build pipeline. Beyond the basic passive scan, you can add active scan rules and add-ons for access control testing, scanning JavaScript-driven applications, detecting SQL injection, port scanning to support penetration testing, and more.
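A common first step for pipeline integration is ZAP's baseline scan script, `zap-baseline.py`, which ships in the ZAP Docker images. The sketch below wraps it from Python. It builds the `docker run` command and turns the scan's exit code into a pass or block decision. The image tag and exit-code meanings reflect the ZAP documentation at the time of writing and should be verified against your ZAP version. The `fail_on_warnings` parameter is a policy knob added for illustration, not a ZAP option.

```python
import subprocess

# Image name as documented by the ZAP project at the time of writing; verify before use.
ZAP_IMAGE = "ghcr.io/zaproxy/zaproxy:stable"

# Exit codes documented for zap-baseline.py.
EXIT_MEANINGS = {
    0: "pass",
    1: "at least one FAIL",
    2: "warnings only, no FAILs",
    3: "other failure",
}


def baseline_command(target_url: str) -> list[str]:
    """Build the docker command for a passive baseline scan of target_url."""
    return ["docker", "run", "--rm", "-t", ZAP_IMAGE, "zap-baseline.py", "-t", target_url]


def should_block_release(exit_code: int, fail_on_warnings: bool = False) -> bool:
    """Decide whether the pipeline should stop, based on the scan's exit code."""
    if exit_code == 0:
        return False
    if exit_code == 2:
        return fail_on_warnings
    return True  # FAIL results, or the scan itself did not complete


def run_baseline(target_url: str) -> bool:
    """Run the scan (requires Docker) and return True if the release may proceed."""
    result = subprocess.run(baseline_command(target_url), check=False)
    print("ZAP baseline:", EXIT_MEANINGS.get(result.returncode, "unknown exit code"))
    return not should_block_release(result.returncode)
```

For example, `run_baseline("https://staging.example.com")` would scan a placeholder staging URL. Point scans only at environments you own or are authorized to test.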

For both static and dynamic analysis, you might want to review all findings through a single pane of glass so you can remediate them before moving to production. With a DevSecOps pipeline built on AWS, you can use AWS Security Hub to aggregate the findings. You can then use AWS Lambda-based triggers, for example Amazon EventBridge rules that invoke Lambda functions, to automate remediation actions.
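Security Hub publishes findings to Amazon EventBridge, where a rule can invoke a Lambda function. The handler below is a minimal sketch of the filtering half of that flow. It reads AWS Security Finding Format (ASFF) fields (`Id`, `Title`, `Severity.Label`, `Resources`) from the event's `detail.findings` list and selects the high-severity ones. The severity threshold is an assumption, and the remediation step is a hypothetical placeholder.

```python
from typing import Any

# Severity labels that trigger automated remediation (a policy choice, not an AWS default).
AUTO_REMEDIATE = {"HIGH", "CRITICAL"}


def select_for_remediation(event: dict[str, Any]) -> list[dict[str, str]]:
    """Pick the findings in a Security Hub EventBridge event that need action."""
    selected = []
    for finding in event.get("detail", {}).get("findings", []):
        label = finding.get("Severity", {}).get("Label", "")
        if label in AUTO_REMEDIATE:
            resources = [r.get("Id", "") for r in finding.get("Resources", [])]
            selected.append({
                "id": finding["Id"],
                "title": finding.get("Title", ""),
                "resources": ", ".join(resources),
            })
    return selected


def lambda_handler(event, context):
    """Entry point for an EventBridge rule that matches Security Hub findings."""
    to_fix = select_for_remediation(event)
    for item in to_fix:
        # Hypothetical hook: replace with your own remediation (revoke a rule, open a ticket, ...).
        print(f"Remediating {item['id']}: {item['title']} on {item['resources']}")
    return {"remediated": len(to_fix)}
```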

Continuous Monitoring of Execution Platforms

As is now well known in cloud security circles, it really does take a village to secure your workloads in the cloud. Cloud security is a complex responsibility that does not end with validating that your application code is secure; you also need to keep your data and applications under continuous watch. That’s where runtime scanning of applications and continuous scanning of your OS and container images come into play.
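At its core, continuous image scanning compares what is installed in an image against a feed of known advisories. The comparison is re-run whenever either side changes. The sketch below shows only the comparison step. The package inventory and advisory records are hypothetical stand-ins for what a real scanner would extract from the image and pull from a vulnerability database. The version parser also handles only simple dotted numeric versions, not distribution-specific version schemes.

```python
from typing import NamedTuple


class Advisory(NamedTuple):
    package: str
    fixed_in: str     # first version that contains the fix
    advisory_id: str


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '1.2.12' into (1, 2, 12) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in version.split("."))


def scan_image(installed: dict[str, str], advisories: list[Advisory]) -> list[str]:
    """Report installed packages that are older than the version fixing a known advisory."""
    findings = []
    for adv in advisories:
        version = installed.get(adv.package)
        if version is not None and parse_version(version) < parse_version(adv.fixed_in):
            findings.append(f"{adv.package} {version} < {adv.fixed_in} ({adv.advisory_id})")
    return findings


# Hypothetical inventory and advisory feed.
installed = {"libfoo": "1.2.9", "libbar": "2.1.0"}
advisories = [
    Advisory("libfoo", "1.2.12", "EXAMPLE-0001"),  # a plain string comparison would miss this one
    Advisory("libbar", "2.0.5", "EXAMPLE-0002"),   # already fixed in the installed version
    Advisory("libqux", "1.0.0", "EXAMPLE-0003"),   # not installed in this image
]

for finding in scan_image(installed, advisories):
    print("VULNERABLE:", finding)
```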

Runtime Application Self-Protection (RASP) has become a key component of cloud security strategy. It focuses closely on an application’s internals through sensors embedded in the application. Data collected from these sensors is combined with contextual information about code, configurations, and control flows to identify breaches and other high-risk activities and runtime conditions. RASP tools such as those from Imperva and Signal Sciences provide two main capabilities. The first is detecting and blocking malicious activity within the application runtime. The second is threat intelligence that produces insights into attack vectors, methods, and motivations.
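To make the embedded-sensor idea concrete, here is a toy Python sketch. It is not how Imperva, Signal Sciences, or any other product works internally. It only illustrates the general principle: instrument a sensitive call (here, SQL execution through the standard `sqlite3` module) and combine it with context about which values came from untrusted input, so a likely injection can be blocked at runtime. The `SqlSensor` class and its heuristics are hypothetical.

```python
import sqlite3


class SqlSensor:
    """Toy in-process sensor: tracks untrusted inputs and checks queries before they run."""

    SUSPICIOUS = ("'", "--", ";")

    def __init__(self) -> None:
        self.untrusted: set[str] = set()

    def track(self, value: str) -> str:
        """Record a value that came from outside the application (e.g. a request parameter)."""
        self.untrusted.add(value)
        return value

    def execute(self, conn: sqlite3.Connection, sql: str, params: tuple = ()):
        # Context check: untrusted input pasted into the SQL text together with SQL
        # metacharacters suggests the query structure may have been altered.
        for value in self.untrusted:
            if value in sql and any(token in value for token in self.SUSPICIOUS):
                raise PermissionError(f"blocked query built from untrusted input: {value!r}")
        return conn.execute(sql, params)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.execute("INSERT INTO users VALUES ('alice')")

sensor = SqlSensor()
name = sensor.track("x' OR '1'='1")

# Parameterized query: the input stays data, so the sensor lets it through.
print(sensor.execute(conn, "SELECT name FROM users WHERE name = ?", (name,)).fetchall())

# String concatenation: the sensor blocks the query before it reaches the database.
try:
    sensor.execute(conn, f"SELECT name FROM users WHERE name = '{name}'")
except PermissionError as err:
    print(err)
```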

Detective Control and Prevention

Runtime self-protection is often complemented by detective control applied at the external network level, in the form of a Web Application Firewall (WAF). This type of detection works at the HTTP level. It monitors inbound traffic, analyzes threats, and blocks them before they reach the application, which is why it is also known as perimeter control.
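The perimeter idea can be sketched as a function that inspects each inbound request and decides whether to forward it. In a real deployment, this logic runs in a WAF in front of the application, not in application code, and uses maintained rule sets. The three regular-expression rules below are simplified, hypothetical signatures that a determined attacker could evade.

```python
import re
from urllib.parse import unquote_plus

# Illustrative signatures only; real WAF rule sets are far more extensive.
RULES = {
    "sql-injection": re.compile(r"'\s*(or|and)\s+\S+\s*=|union\s+select", re.IGNORECASE),
    "xss": re.compile(r"<\s*script|javascript:", re.IGNORECASE),
    "path-traversal": re.compile(r"\.\./"),
}


def inspect_request(path: str, query: str) -> tuple[bool, str]:
    """Return (allowed, reason) for an inbound request, before it reaches the application."""
    decoded = unquote_plus(f"{path}?{query}")  # attackers often URL-encode payloads
    for name, pattern in RULES.items():
        if pattern.search(decoded):
            return False, f"blocked by rule '{name}'"
    return True, "allowed"


print(inspect_request("/search", "q=shoes"))
print(inspect_request("/search", "q=%3Cscript%3Ealert(1)%3C%2Fscript%3E"))
print(inspect_request("/items", "id=1+UNION+SELECT+password+FROM+users"))
```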

Many RASP tool providers also offer WAF capabilities. For web-facing applications running on AWS, you can also use AWS WAF. It helps protect public-facing sites and API endpoints against bot attacks, SQL injection, and cross-site scripting (XSS) attacks through configurable rules. AWS WAF also supports managed rule groups, including ones offered by third-party vendors through AWS Marketplace, to help address risks such as those in the OWASP Top 10.
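As a hedged sketch of configuring AWS WAF from code, the snippet below builds a web ACL that references three AWS managed rule groups, using boto3's `wafv2` client. The core rule set includes cross-site scripting rules. The ACL name, the choice of rule groups, and the REGIONAL scope are assumptions for illustration. Check the AWS WAF documentation for current rule group names and pricing before deploying.

```python
def managed_rule(name: str, priority: int) -> dict:
    """Build a WAFv2 rule that references an AWS managed rule group by name."""
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {"ManagedRuleGroupStatement": {"VendorName": "AWS", "Name": name}},
        "OverrideAction": {"None": {}},  # keep the rule group's own block/count actions
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }


# AWS managed rule groups for common web exploits, SQL injection, and known bad inputs.
RULE_GROUPS = [
    "AWSManagedRulesCommonRuleSet",
    "AWSManagedRulesSQLiRuleSet",
    "AWSManagedRulesKnownBadInputsRuleSet",
]


def build_rules() -> list[dict]:
    """Assign priorities in list order (lower numbers are evaluated first)."""
    return [managed_rule(name, priority) for priority, name in enumerate(RULE_GROUPS)]


def create_web_acl(acl_name: str, scope: str = "REGIONAL"):
    """Create the web ACL (requires boto3 and AWS credentials; not called in this sketch)."""
    import boto3  # imported lazily so build_rules() works without AWS access

    client = boto3.client("wafv2")
    return client.create_web_acl(
        Name=acl_name,
        Scope=scope,  # use "CLOUDFRONT" for CloudFront distributions
        DefaultAction={"Allow": {}},
        Rules=build_rules(),
        VisibilityConfig={
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": acl_name,
        },
    )
```

A REGIONAL web ACL is then associated with a resource such as an Application Load Balancer or an API Gateway stage, for example with the `wafv2` client's `associate_web_acl` call.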

Conclusion

Keep in mind that shifting left is not only about using these tools. The tools are essential, but shifting left is even more about people and a shift in culture. For starters, it requires adopting a quality-first mindset. An organization might start by having security representation early in its development process. Eventually, though, teams must be built around a culture that promotes ownership, where individuals are aware of every stage in the software lifecycle.

To build a successful shift-left process, you need teams that can identify security gaps and shortcomings in your existing toolchain and collaborate to discuss real-world risks. They can then use that shared insight to drive decisions about selecting packages, writing functions, running tests, and deploying to an environment. Teams must also embrace automation everywhere and choose the right tools for Continuous Integration, Test Automation, Issue Tracking, Environment Scanning, and Code Deployment.

At Jade, all our service offerings are built around teams that live by this culture of collaboration and ownership. We make the quality and security of whatever you choose to develop our top priority, so much so that we include dedicated security and quality personnel in all our turnkey solution offerings. Be it your Salesforce or SAP implementation, infrastructure services, or digital-native development, we’re here to help you.

Visit our website to learn more about our services: www.jadeglobal.com/technology-advisory