Snowpark: Technologies and Benefits

Introduction: What is Snowpark?

Today, programming languages like Java, Scala, and Python are prevalent. They are used in applications large and small, from enterprise software to web development. This popularity has produced a rich ecosystem of packages, standard libraries included, that serve as a foundation for data science and analysis.

When you work with large-scale datasets and analytics, you need more flexibility than traditional relational databases offer. You need access to exactly the data your functions require, without loading everything else into memory. This also has implications for systems administration, for instance when you change your functions or migrate them to newer versions. You need a system that is easy to administer and maintain.

You also want easier access to the data that matters most at any given moment. And you want to reach that data from familiar languages and environments, such as Java, Python, and Scala, without working around the impedance mismatch between your programming language and the database.

Benefits of Snowpark:

Snowflake’s Snowpark brings these capabilities together in one solution, making it simpler and more efficient to access your Snowflake data.

Snowflake’s Snowpark provides a programming model that abstracts the database and lets developers use the languages, environments, and frameworks of their choice. It is designed to ease maintenance, offering a simpler environment that requires less administrative work while giving users greater access to the data they need, which makes delivery more efficient.

It lets developers work with data using languages and constructs they already know. The Snowpark API is a new approach to programmability for the Snowflake Data Cloud: it brings data scientists, data engineers, and application developers onto the same platform, making it easier for them to collaborate and streamline their data architecture.

Because Snowflake compute can be scaled up and down on demand, rather than running on a single dedicated server, we avoid paying for compute that we are not using throughout the day.
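As a rough illustration of on-demand scaling, the Scala sketch below resizes a warehouse through a Snowpark session using the standard `ALTER WAREHOUSE` properties `WAREHOUSE_SIZE`, `AUTO_SUSPEND` (in seconds), and `AUTO_RESUME`. The warehouse name `MY_WH`, the chosen sizes, the 60-second timeout, and the connection file name `profile.properties` (holding keys such as `URL`, `USER`, and `WAREHOUSE`) are assumptions for illustration only.

```scala
import com.snowflake.snowpark._

// Sketch: resize a warehouse on demand and let it suspend when idle.
// WAREHOUSE_SIZE, AUTO_SUSPEND and AUTO_RESUME are ALTER WAREHOUSE properties;
// the warehouse name, sizes and profile.properties file are hypothetical.
object WarehouseScalingSketch {

  // Builds the ALTER WAREHOUSE statement; AUTO_SUSPEND is given in seconds.
  def alterWarehouseSql(warehouse: String, size: String, autoSuspendSeconds: Int): String =
    s"ALTER WAREHOUSE $warehouse SET WAREHOUSE_SIZE = '$size' " +
      s"AUTO_SUSPEND = $autoSuspendSeconds AUTO_RESUME = TRUE"

  def main(args: Array[String]): Unit = {
    val session = Session.builder.configFile("profile.properties").create
    try {
      // Scale up before a heavy batch job.
      session.sql(alterWarehouseSql("MY_WH", "LARGE", 60)).collect()
      // ... run the batch job here ...
      // Scale back down afterwards; AUTO_SUSPEND stops the warehouse once it is idle.
      session.sql(alterWarehouseSql("MY_WH", "XSMALL", 60)).collect()
    } finally {
      session.close()
    }
  }
}
```

Sizing up only for the duration of a heavy job, and letting the warehouse suspend itself when idle, is what keeps unused compute off the bill.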

Snowpark Integration:

Snowpark is natively integrated into the Snowflake engine, so users get Snowflake’s security, governance, and manageability benefits.

How Snowpark Executes Your Code:

Developers can build complex data pipelines with the Snowpark API. Snowpark converts these operations to SQL and pushes them down to the Snowflake engine for execution. Operations are executed lazily on the server, which reduces the amount of data transferred between your client and the Snowflake database.
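A minimal sketch of this lazy behavior, assuming a hypothetical `ORDERS` table with `REGION` and `AMOUNT` columns and a hypothetical connection file `profile.properties`. The DataFrame transformations only describe the query; `explain()` prints the SQL that Snowpark generated, and nothing runs in Snowflake until an action such as `collect()` is called.

```scala
import com.snowflake.snowpark._
import com.snowflake.snowpark.functions._

// Sketch of lazy evaluation; the ORDERS table and its columns are hypothetical.
object LazyPipelineSketch {
  def main(args: Array[String]): Unit = {
    val session = Session.builder.configFile("profile.properties").create
    try {
      // Building the DataFrame only records a query plan; nothing runs yet.
      val revenueByRegion = session
        .table("ORDERS")
        .filter(col("AMOUNT") > lit(0))
        .groupBy(col("REGION"))
        .agg(sum(col("AMOUNT")).as("REVENUE"))
        .sort(col("REVENUE").desc)

      // Prints the SQL Snowpark generated, still without executing it.
      revenueByRegion.explain()

      // collect() is an action: the SQL runs in Snowflake and only the
      // aggregated rows, one per region, are returned to the client.
      revenueByRegion.collect().foreach(println)
    } finally {
      session.close()
    }
  }
}
```

Because the filter and aggregation execute inside Snowflake, the client receives one row per region instead of every order.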

Snowpark also improves code reuse by letting developers implement complex logic as functions written in the same language they use with the Snowpark API. These user-defined functions (UDFs) are pushed down to Snowflake for execution, so they operate on the data in the database without moving it first.
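The sketch below shows this UDF workflow in Scala: the logic is an ordinary Scala function, which is registered with `session.udf.registerTemporary` and then applied to a column so that it runs inside Snowflake. The `NORMALIZE_EMAIL` name, the `CUSTOMERS` table, its `EMAIL` column, and the `profile.properties` connection file are hypothetical.

```scala
import com.snowflake.snowpark._
import com.snowflake.snowpark.functions._

// Sketch: write logic once as a plain Scala function, then push it down as a UDF.
// The UDF name, CUSTOMERS table and EMAIL column are hypothetical.
object UdfSketch {

  // Plain Scala logic: trim and lower-case an e-mail address (NULL stays NULL).
  val normalizeEmail: String => String =
    (email: String) => if (email == null) null else email.trim.toLowerCase

  def main(args: Array[String]): Unit = {
    val session = Session.builder.configFile("profile.properties").create
    try {
      // Registers the function as a temporary UDF for this session;
      // Snowflake then runs it next to the data.
      val normalizeEmailUdf = session.udf.registerTemporary("NORMALIZE_EMAIL", normalizeEmail)

      session
        .table("CUSTOMERS")
        .select(col("EMAIL"), normalizeEmailUdf(col("EMAIL")).as("EMAIL_NORMALIZED"))
        .show()

      // The registered name can also be called from SQL in the same session.
      session.sql("SELECT NORMALIZE_EMAIL('  Ada@Example.COM ')").show()
    } finally {
      session.close()
    }
  }
}
```

Keeping the logic in a plain function means it can be unit-tested locally before it is pushed down. Depending on how the application is packaged, its classes may also need to be made available to Snowflake, for example with `session.addDependency`.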

Snowpark has also expanded its Java function support to include table functions (UDTFs) across all supported cloud providers. This opens up new use cases in Snowpark and marks a shift from supporting only scalar functions, which process each row independently. Table functions let developers perform more complex operations, such as producing a single result for a set of rows or maintaining state across several rows.
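Snowpark’s Scala API exposes table functions through UDTF classes. The sketch below assumes a hypothetical `ORDERS` table with a `VARCHAR` column `CUSTOMER_ID` and an integer column `AMOUNT`; the class name, the registered name `PARTITION_TOTAL`, and the `profile.properties` connection file are also hypothetical. `process` is called once per input row and only updates state, while `endPartition` emits a single total per partition, something a scalar function cannot do.

```scala
import com.snowflake.snowpark._
import com.snowflake.snowpark.types._
import com.snowflake.snowpark.udtf._

// Sketch of a stateful table function (UDTF). All names are hypothetical.
// Emits one row per partition: the partition's key and the sum of its amounts.
class PartitionTotalUdtf extends UDTF2[String, Int] {
  // State carried from one input row to the next within a partition.
  private var key: String = null
  private var total: Long = 0L

  // Called once per input row; it only updates state and emits nothing.
  override def process(customerId: String, amount: Int): Iterable[Row] = {
    key = customerId
    total += amount
    Seq.empty[Row]
  }

  // Called after the last row of a partition: emit the total, then reset the state.
  override def endPartition(): Iterable[Row] = {
    val result = Seq(Row(key, total))
    key = null
    total = 0L
    result
  }

  override def outputSchema(): StructType =
    StructType(StructField("CUSTOMER_ID", StringType), StructField("TOTAL", LongType))
}

object UdtfSketch {
  def main(args: Array[String]): Unit = {
    val session = Session.builder.configFile("profile.properties").create
    try {
      // Register the UDTF for this session under a hypothetical name.
      session.udtf.registerTemporary("PARTITION_TOTAL", new PartitionTotalUdtf())
      // PARTITION BY sends each customer's rows to one partition of the UDTF.
      session.sql(
        """SELECT t.CUSTOMER_ID, t.TOTAL
          |FROM ORDERS o,
          |     TABLE(PARTITION_TOTAL(o.CUSTOMER_ID, o.AMOUNT) OVER (PARTITION BY o.CUSTOMER_ID)) t
          |""".stripMargin
      ).show()
    } finally {
      session.close()
    }
  }
}
```

Passing `CUSTOMER_ID` into the function and returning it in the output row keeps each total labeled with its customer.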

Accessing your Snowflake data using Snowpark

Much of the data generated today, including live streaming data, is unstructured. Snowpark raises the bar by letting developers access and process unstructured data directly in the Snowflake Data Cloud, alongside the rest of their Snowflake data.

Trying to make sense of unstructured data using traditional SQL is like looking at the world through a pinhole in a board: you can see some things, but not others. Being able to read unstructured data is a big step forward for data analysis. Accessing detailed subsets of data, including file metadata and EXIF data, directly from Snowflake can help users deliver new products and services faster or make existing ones more efficient.

By adding table functions, Snowpark gives users a way to see the whole picture; for example, they can see what the EXIF data in an image file looks like. Accessing your Snowflake data through Snowpark also makes developers' jobs easier, letting them focus on their logic without worrying about how to get data out of unstructured formats. Snowpark supports transforming this information for many use cases, such as parsing invoices and other files.
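As a first step toward working with unstructured files, a sketch like the following lists staged PDF files through a directory table, using the `DIRECTORY` table function and its `RELATIVE_PATH`, `SIZE`, and `LAST_MODIFIED` columns. It assumes a hypothetical stage named `INVOICE_STAGE` with a directory table enabled and a hypothetical `profile.properties` connection file. Extracting EXIF data or invoice fields from each file would then be done in a UDF or UDTF, which is not shown here.

```scala
import com.snowflake.snowpark._

// Sketch: locate unstructured files (for example PDF invoices) on a stage.
// Assumes a stage named INVOICE_STAGE with a directory table enabled (hypothetical).
object StagedFilesSketch {

  // DIRECTORY(@stage) exposes per-file metadata such as RELATIVE_PATH and SIZE.
  def listFilesSql(stage: String, extension: String): String =
    s"SELECT RELATIVE_PATH, SIZE, LAST_MODIFIED FROM DIRECTORY(@$stage) " +
      s"WHERE RELATIVE_PATH ILIKE '%.$extension'"

  def main(args: Array[String]): Unit = {
    val session = Session.builder.configFile("profile.properties").create
    try {
      // Runs in Snowflake; only the file metadata comes back to the client.
      session.sql(listFilesSql("INVOICE_STAGE", "pdf")).show()
    } finally {
      session.close()
    }
  }
}
```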

The Snowflake Data Cloud also offers a new logging framework, available in private preview, that can be called from within Snowpark. It improves development productivity by making monitoring and debugging easier.

Setting up a Development Environment for Snowpark:

We can use various environments to develop Snowpark applications:

- Application development tools (like Visual Studio Code or IntelliJ IDEA Community Edition)

- Read-eval-print loop (REPL) interactive shells (like the sbt REPL or the Scala REPL)

- Jupyter notebooks

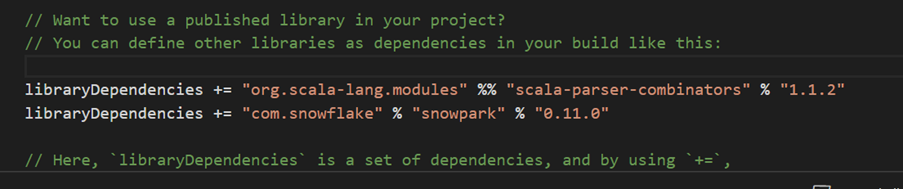

The Snowpark library must be added to your project as a dependency.
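A minimal sbt sketch for adding the Snowpark Scala library, which is published on Maven Central as `com.snowflake:snowpark`. The version is left as a placeholder; check Snowflake's release notes for the current release and the Scala versions it supports (Snowpark for Scala has targeted Scala 2.12).

```scala
// build.sbt (sketch): replace <version> with a current Snowpark release.
// The artifact name carries no Scala-version suffix, hence `%` rather than `%%`.
libraryDependencies += "com.snowflake" % "snowpark" % "<version>"
```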

Sample Code to connect to Snowflake

```scala
import com.snowflake.snowpark._
import com.snowflake.snowpark.functions._

object Main {
  def main(args: Array[String]): Unit = {
    // Replace the placeholder values below with your own connection details.
    val configs = Map(
      "URL" -> "https://.snowflakecomputing.com:443",
      "USER" -> "",
      "PASSWORD" -> "",
      "ROLE" -> "",
      "WAREHOUSE" -> "",
      "DB" -> "",
      "SCHEMA" -> ""
    )

    // Open a session, run a simple query, print the result, and release the session.
    val session = Session.builder.configs(configs).create
    session.sql("show tables").show()
    session.close()
  }
}
```
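Hard-coding credentials in source code is best avoided. One alternative, sketched below, reads the same configuration keys from environment variables; the `SNOWFLAKE_*` variable names are a hypothetical convention, not something Snowpark defines.

```scala
import com.snowflake.snowpark._

// Sketch: build the Snowpark config map from environment variables instead of
// hard-coding credentials. The SNOWFLAKE_* variable names are hypothetical.
object EnvConfigSketch {

  // Snowpark config key -> environment variable that supplies its value.
  private val envNames = Seq(
    "URL" -> "SNOWFLAKE_URL",
    "USER" -> "SNOWFLAKE_USER",
    "PASSWORD" -> "SNOWFLAKE_PASSWORD",
    "ROLE" -> "SNOWFLAKE_ROLE",
    "WAREHOUSE" -> "SNOWFLAKE_WAREHOUSE",
    "DB" -> "SNOWFLAKE_DB",
    "SCHEMA" -> "SNOWFLAKE_SCHEMA"
  )

  // Keeps only the settings whose environment variable is actually set.
  def configsFrom(env: Map[String, String]): Map[String, String] =
    envNames.flatMap { case (key, envName) => env.get(envName).map(value => key -> value) }.toMap

  def main(args: Array[String]): Unit = {
    val session = Session.builder.configs(configsFrom(sys.env)).create
    try session.sql("show tables").show()
    finally session.close()
  }
}
```

Separating the pure `configsFrom` function from session creation also makes the configuration logic easy to test without a Snowflake account.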


Conclusion

Snowflake’s Snowpark brings a Spark-like DataFrame programming style to SQL execution in Snowflake. It allows complex data processing jobs to run in the Snowflake Data Cloud and lets data engineers, data scientists, and developers who prefer other programming languages take advantage of Snowflake’s platform capabilities. So whether through Snowpark’s native integration into Snowflake or by giving data experts direct access to their Snowflake data through Snowpark, your data becomes your most powerful tool.